Everyone knows that parallelization is a hard but important issue, as it seems that it´s not affordable anymore to increase CPU clock speeds. The future is multi-core! So you should start getting familiar with System.Threading a.s.a.p. ;)

Determine the appropriate balance

When one identifies a parallelizable task, it´s always hard to find the appropriate balance for parallelization. Is it better to open more threads or is it better to give more work to each thread? The answer to that question of course depends on many things, and remarkably on the nature of the task each thread will handle.

It is important to guess the amount of time that task will idle in each thread. If it´s an intensive task, then better start less threads with more work each. If it´s just the opposite (a task that will frequently idle waiting for something -IO, graphics, whatever-), then better open more threads with less work, as they will scheduled through the physical cores of your machine when one is idling. Of course, never open less threads than your machines physical cores!

What´s the objective? The same as in hotels… 100% occupancy.

|

An easy way to determine the nature of our task is to let it run on a single CPU, and see the Task Manager CPU usage history graph. This will give you an idea of the CPU usage your process made. You will ask now how to force your application to run in a specific CPU. The answer is Processor Affinity (see below).

To loose, or not to loose control. That´s the question…

When one starts dealing with parallelization, the first idea is to split processes through the CPUs oneself. Why not? If you have 10.000 operations, then open four threads with 25.000 operations each. First for CPU0, second for CPU1 and so on… I´d feel very comfortable with this idea. Neat and clean, and everything under control, right? Well, it´s not always that simple.

In an ideal world, a single task that is not going to be parallelized anymore and that lives alone (not with the dozens of neighbors a process has in a modern OS), is better handled by a single CPU, as this will increment cache hits and eliminates any thread switching infrastructure overhead. But in real life, processes are interrupted by OS operations, IO, other processes and many other things. A multi-core machine is perfect for handling all that interruptions, as it can spread them all through the existing cores, but if we all start fixing our applications to specific CPUs, the capacity of the OS to avoid locks and waits is heavily reduced.

As this great article explains, most of the times, it is much better to rely onto the Operating System so it can put each thread wherever he wants. However, we´ll see some results regarding this decision later.

Processor affinity of a process

In Windows, you can force a process to run in a specific CPU just using the Task Manager (right click your process and select “Set Affinity”) or programmatically using the System.Diagnostics namespace. The following line will change current process affinity to CPU 1:

System.Diagnostics.Process.GetCurrentProcess().ProcessorAffinity = (System.IntPtr)1;

The ProcessorAffinity property is a bit mask variable. So, the values are:| Value | Allowed processors |

| 0 (0000) | Not allowed (that would mean use no processors) |

| 1 (0001) | Use processor 1 |

| 2 (0010) | Use processor 2 |

| 3 (0011) | Use both processors 1 and 2 |

| 4 (0100) | Use processor 3 |

| 5 (0101) | Use both processors 1 and 3 |

| 6 (0110) | Use both processors 2 and 3 |

| 7 (0111) | Use processors 1,2 and 3 |

| 8 (1000) | Use processor 4 |

| and so on… |

Processor affinity of a thread

A first requirement in order to control how your threads are distributed through CPUs is to be able to set a thread´s affinity (not a process). There is an interesting post about this issue here, where Tamir Khason explains the whole thing. To change a thread´s affinity we must use the System.Diagnostics.ProcessThread class (ProcessAffinity property). The problem comes when one tries to find out which thread is the one we are looking for, in the list of the current process´ threads.First Approach - Deprecated

We get the ProcessThread instance with the following code:

ProcessThread t = Process.GetCurrentProcess().Threads.OfType<ProcessThread>().Single(pt => pt.Id == AppDomain.GetCurrentThreadId());

t.ProcessorAffinity = (IntPtr)(int)cpuID;

The problem with this approach is that the method GetCurrentThreadId is deprecated, so better you don´t use it.

A curious note:Second approach – Not valid

You could be tempted to use the ManagedThreadID to search inside the Threads collection of your process. Don´t do it. ProcessThread.ID has nothing to do with the ManagedThreadID property, they represent different things. A guy says here that ManagedThreadID is in fact the offset inside the ProcessThread collection, but I didn´t investigate any further, and I wouldn´t advise you to do so unless you verify this information

Third approach – Valid but unmanaged

The third approach will “dllimport” kernel32.dll and use some of it´s functions. This method is tested and works correctly. Here we go:

[DllImport("kernel32.dll")]

static extern IntPtr GetCurrentThread();

[DllImport("kernel32.dll")]

static extern IntPtr SetThreadAffinityMask(IntPtr hThread, IntPtr dwThreadAffinityMask);

SetThreadAffinityMask(GetCurrentThread(), new IntPtr(1 << (int)cpuID));

If you are programming for the XBox360 with the XNA Game Studio 3.0, you have a Thread.SetProcessorAffinity method ready for you, without all the garbage above. This is just because the XBox specially needs to take advantage of its cores to give a decent performance. I don´t know if the presence of this method is due to a worse performance of the XBox Scheduler than in Vista… may be. However you can read further here.

The Tests

Task: Generation of three 2D tables of information as a result of a geometric test in a 3D scene, involving 975.065 collision tests (ray-mesh) each

Hardware: Dell XPS 630 QuadCore

Monitoring software: Process Explorer

PART 1 (multithreading disabled). Impact of processor affinity

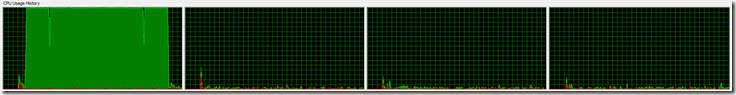

Test 1:- Number of threads: 1 (main thread)

- Processor affinity: CPU 1

- Total time: 2 min 57.11 secs

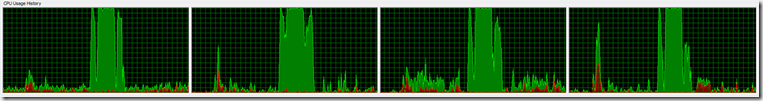

With processor affinity enabled to CPU 1, all the work is obviously handled by this CPU. The two peaks you can appreciate in the graph are due to a IO operation (saving data to disk) and clearly demarcate the generation of each table of data. In this test we can clearly appreciate that our task is very intensive and constant, as keeps the processor at a 100% usage almost all the time.

Test 2:

- Number of threads: 1 (main thread)

- Processor affinity: None (any processor)

- Total Time: 2 min 29.45 secs

Most of the work was handled by CPU 2 but the rest of cores also worked on the process (checked in Process Explorer that all the green lines belonged to the process being measured). It is clear that forcing the thread to work in CPU 1 only introduced locks and waiting periods probably due to interruptions coming from other programs that needed CPU 1 too.

Winner of part 1 ……… Windows Scheduler !

PART 2 (multithreading enabled 1)

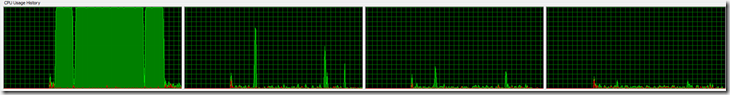

Test 1:- Number of threads: 2 (main thread + 1 calculation thread)

- Processor affinity:

- Main thread: Any

- Calculation thread: CPU 1

- Total time: 2 min 18.14 secs

The results we are getting here are quite logical. The main change in this test is that we are separating the calculation from UI update and IO saving to disk. You can appreciate the low peaks in the first graph and their equivalent in cores 2 and 3 (where the saving operation is placed). It is very interesting to note that separating the saving operation to different cores doesn´t save any time, because we wait for it to complete before continuing with the next table of data. That´s why now the low peaks in the first graph are much more noticeable. We have moved some computing from one core to another, but not parallelized anything.

However, we get a small performance improvement, mostly because now the UI update (which involves some Bitmap manipulation) is now done at cores 2 and 3

Test 2:

- Number of threads: 2 (Main thread + 1 calculation thread)

- Processor affinity: None

- Total time: 2 min 14.86 secs

This time, we can again appreciate that work has been scattered through all cores, with a more remarkable presence of CPU 2. Again, the Windows scheduler wins the race.

Winner of part 2 ……… Windows Scheduler !

PART 3 (multithreading enabled 2)

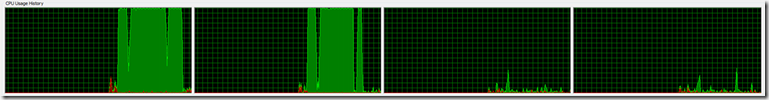

Test 1:- Number of threads: 3 (main thread + 2 calculation threads)

- Processor affinity:

- Main thread: Any

- Calculation threads: CPUs 1 and 2

- Total time: 1 min 18.66 secs

We start to see a big performance improvement here. Twice the computing power, almost twice faster. That seems quite realistic.

Test 2:

- Number of threads: 3 (main thread + 2 calculation threads)

- Processor affinity: None

- Total time: 1 min 16.59 secs

Another win for the Windows Scheduler. Obviously when the total time gets shorter, the differences too, but the OS still wins.

Winner of part 3 ……… Windows Scheduler !

PART 4 (multithreading enabled 3)

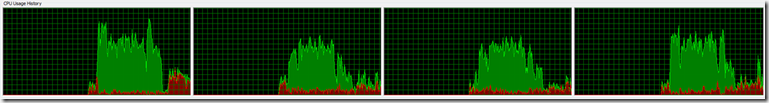

Test 1:- Number of threads: 5 (main thread + 4 calculation thread)

- Processor affinity:

- Main thread: Any

- Calculation threads: CPUs 1, 2, 3 and 4

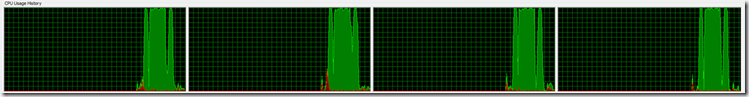

- Total time: 41.76 secs

Now comes the huge performance improvement. With 4 calculating threads, the total time gets reduced to 41 secs!. Let´s see how the OS performs with 5 threads.

Test 2:

- Number of threads: 5 (main thread + 4 calculation thread)

- Processor affinity: None

- Total time: 42.05 secs

Wow… That was too close! This time we must mark the OS as looser.

Winner of part 4 ……… Processor Affinity! (it was close)

Test 1:

- Number of threads: 9 (main thread + 8 calculation threads)

- Processor affinity:

- Main thread: Any

- Calculation threads 1..4: CPUs 1..4

- Calculation threads 5..8: CPUs 1..4

- Total time: 41.07 secs

Test 2:

- Number of threads: 9 (Main thread + 8 calculation threads)

- Processor affinity: None

- Total time: 38.24 secs

Wow… this is my boy! 38 secs !!!

However, this are expected results. If you set more threads than physical cores, it is obvious that some thread scheduling should have to be done. Forcing threads to work on a certain CPU just brings down parallelization. As you can see we get almost no benefit when using 8 calculation threads instead of 4 (if affinity is enabled). So it´s clear that giving some freedom to Windows here, just to do its job, is by far the best option.

Winner of part 5 ……… Windows Scheduler !

Results

| Test | Vista Scheduler | Processor Affinity |

| Part 1 | 149.45 secs | 177.11 secs |

| Part 2 | 134.86 secs | 138.14 secs |

| Part 3 | 76.59 secs | 78.66 secs |

| Part 4 | 42.05 secs | 41.76 secs |

| Part 5 | 38.24 secs | 41.07 secs |

So, what´s the optimal number of threads for my task?

Does this tendency (the more threads, the higher performance) continues forever? The answer is, obviously, no.In an ideal, 100% intensive and constant task, the optimal number of threads would be the number of physical cores, but in real life, such an intensive task is very difficult to find. Almost every computation algorithm will have idle times, waiting for a memory paging operation or whatever. So, the number of threads that will give you top performance will depend on the intensiveness and constancy of your application.

I have measured some additional timings (for the OS scheduler version only):

- 16 threads –> 37.89 secs.

- 18 threads –> 37.44 secs.

- 24 threads –> 38.03 secs.

Conclusions

1.- The Windows Scheduler does a GREAT job (specially in Vista). It beats a manual processor affinity setup almost in every cases, and in those where a manual setup wins the race, it´s by a very short distance.2.- Even if the OS was a little bit worse in all cases would be advisable to use it, mostly because it´s automatic and you don´t have to worry about your thread´s location

3.- Processor affinity is one of the most important parallelism blockers, so use it if you really need it only, not because you are smarter than Vista. In other words: do not re-invent the wheel. Trust the OS wisdom.

4.- Windows Vista Scheduler Rocks !

What´s all this information been used for?

Almost one million ray-mesh intersection tests, and what´s this stuff all about? Some people here in Spain says that if it´s white, and comes in a bottle, it´s probably milk… ;)

Massive Ray-Mesh intersection tests + Results stored as 2D table of data = Probably lighting calculations

|

This are the real results… hope you like it.

Take care!