All the examples I found were based on the interop between XAML and DirectX, but if you go down that path, you'll need to rely on the CompositionTarget.Rendering event to handle your updates and renders, which leaves all the inner processing to Windows.UI.Xaml and limits the frame rate to 60 Hz.

So, how do you create your own unlocked-frame-rate-ready main loop?

First things first:

Dude, where's my Main entry point?

Typically, a C# application has a static Program class where the "main" entry point is defined. When you create a WPF, UWP or Windows Store application, though, that code is apparently missing, and the application is started magically by the system.

The truth is that the main entry point is hidden in automatically-generated source code files under the obj\platform\debug folder. If you look there for a file called App.g.i.cs, you'll find something like this:

    #if !DISABLE_XAML_GENERATED_MAIN
        /// <summary>
        /// Program class
        /// </summary>
        public static class Program
        {
            [global::System.CodeDom.Compiler.GeneratedCodeAttribute("Microsoft.Windows.UI.Xaml.Build.Tasks"," 14.0.0.0")]
            [global::System.Diagnostics.DebuggerNonUserCodeAttribute()]
            static void Main(string[] args)
            {
                global::Windows.UI.Xaml.Application.Start((p) => new App());
            }
        }
    #endif

Et voilà... There's your entry point.

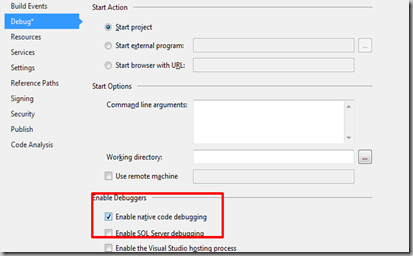

So the first thing to do is define the DISABLE_XAML_GENERATED_MAIN conditional compilation symbol, which prevents Visual Studio from generating the entry point for you.

The next step is to add your own Program class and Main entry point, as you would in any other application, so you have control over the application start procedure. You can simply copy-paste the generated code anywhere in your project.
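One way to define the symbol is in the project properties (Build, Conditional compilation symbols). Alternatively, it can be added directly to the project file. The fragment below is a minimal sketch, assuming a standard MSBuild .csproj; it appends to whatever symbols are already defined rather than replacing them, so it should be placed after the existing property groups:

```xml
<!-- Sketch: an unconditional PropertyGroup, so the symbol applies to every configuration. -->
<PropertyGroup>
  <DefineConstants>$(DefineConstants);DISABLE_XAML_GENERATED_MAIN</DefineConstants>
</PropertyGroup>
```

Note that a `#define` inside one of your own source files would not work here, because the generated Program class lives in a different file (App.g.i.cs) and `#define` only affects the file it appears in.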

The Main Loop

Please note: this implementation is inspired by the C++ equivalent described here.

Now that you have a main entry point, you can replace the invocation of Windows.UI.Xaml.Application.Start (which internally deals with its own main loop) with your own code.

What we want to use instead is Windows.ApplicationModel.Core.CoreApplication.Run, which is not bound to XAML. It takes an IFrameworkViewSource, whose job is to create your own IFrameworkView implementation with your custom Run method.

Something like:

    public static class Program
    {
        static void Main(string[] args)
        {
            MyApp sample = new MyApp();
            var viewProvider = new ViewProvider(sample);

            Windows.ApplicationModel.Core.CoreApplication.Run(viewProvider);
        }
    }

The ViewProvider class

    public class ViewProvider : IFrameworkViewSource
    {
        MyApp mSample;

        public ViewProvider(MyApp pSample)
        {
            mSample = pSample;
        }

        //
        // Summary:
        //     A method that returns a view provider object.
        //
        // Returns:
        //     An object that implements a view provider.
        public IFrameworkView CreateView()
        {
            return new View(mSample);
        }
    }

The View Class

    public void Run()
    {
        var applicationView = ApplicationView.GetForCurrentView();
        applicationView.Title = mApp.Title;

        mApp.Initialize();

        while (!mWindowClosed)
        {
            CoreWindow.GetForCurrentThread().Dispatcher.ProcessEvents(
                CoreProcessEventsOption.ProcessAllIfPresent);

            mApp.OnFrameMove();
            mApp.OnRender();
        }

        mApp.Dispose();
    }
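The Run method above uses mApp and mWindowClosed without showing where they come from. The following is a minimal sketch of how the rest of the View class might look, not the original implementation. It assumes mWindowClosed is set from the CoreWindow.Closed event and that the window is activated from the CoreApplicationView.Activated event, as the C++ templates do. The MyApp class here is a hypothetical stand-in whose members are inferred from the calls made in Run.

```csharp
using System;
using Windows.ApplicationModel.Activation;
using Windows.ApplicationModel.Core;
using Windows.UI.Core;
using Windows.UI.ViewManagement;

// Hypothetical stand-in for the post's MyApp; members inferred from Run().
public class MyApp : IDisposable
{
    public string Title { get; set; } = "My game";
    public virtual void Initialize() { }
    public virtual void OnFrameMove() { }
    public virtual void OnRender() { }
    public virtual void Dispose() { }
}

public class View : IFrameworkView
{
    private readonly MyApp mApp;
    private bool mWindowClosed;

    public View(MyApp pApp)
    {
        mApp = pApp;
    }

    // Called first; the window does not exist yet.
    public void Initialize(CoreApplicationView applicationView)
    {
        applicationView.Activated += OnActivated;
    }

    // Called once the CoreWindow is available.
    public void SetWindow(CoreWindow window)
    {
        // Assumption: closing the window is what ends the main loop.
        window.Closed += (sender, args) => mWindowClosed = true;
    }

    public void Load(string entryPoint) { }

    public void Run()
    {
        ApplicationView.GetForCurrentView().Title = mApp.Title;
        mApp.Initialize();

        while (!mWindowClosed)
        {
            // Pump pending window messages without blocking.
            CoreWindow.GetForCurrentThread().Dispatcher.ProcessEvents(
                CoreProcessEventsOption.ProcessAllIfPresent);
            mApp.OnFrameMove();
            mApp.OnRender();
        }

        mApp.Dispose();
    }

    public void Uninitialize() { }

    private void OnActivated(CoreApplicationView sender, IActivatedEventArgs args)
    {
        // Without this call the window never becomes visible.
        CoreWindow.GetForCurrentThread().Activate();
    }
}
```

A real game would probably also want to react to visibility changes and suspension, switching to a blocking ProcessEvents option while the window is hidden so the loop does not spin in the background.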

This removes the need to rely on XAML in your game (along with its overhead), gives you more control over how the main loop behaves, and unlocks the possibility of rendering with a variable frame rate in C#.
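Once the frame rate is no longer fixed, the update step usually needs to know how much time has passed since the previous frame. Here is a minimal sketch of one way to measure that with System.Diagnostics.Stopwatch. FrameTimer is a hypothetical helper, not part of the original code, and passing its result to OnFrameMove would mean adding a parameter that the method shown earlier does not have.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper: measures the time between consecutive frames.
public sealed class FrameTimer
{
    private readonly Stopwatch mStopwatch = Stopwatch.StartNew();
    private long mLastTicks;

    // Returns the seconds elapsed since the previous call
    // (or since construction, on the first call).
    public double Tick()
    {
        long now = mStopwatch.ElapsedTicks;
        double seconds = (now - mLastTicks) / (double)Stopwatch.Frequency;
        mLastTicks = now;
        return seconds;
    }
}
```

Inside the loop, this could be used as `double dt = timer.Tick();` right before the update call, scaling movement and animation by dt so that game speed stays independent of the frame rate.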

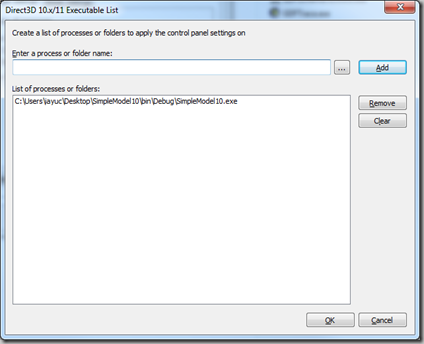

Please refer to this page for further info about how to initialize a swap chain suitable for unlocked frame rates.

Hope it helps!