This article is an introduction to .Net memory management and to the memory limits that both the Runtime and the platform impose on each process. It also gives some tips for dealing with the problems you will face when you reach those limits.

Available memory for a process

No matter how much physical memory you install in a computer, your application will face several restrictions that limit the memory actually available to it.

For instance, a 32 bit system cannot normally use more than 4 GB of physical memory. 32 bits give you a virtual address space with 2^32 = 4,294,967,296 different addresses, and that’s precisely where the 4 GB limit comes from. But even with those 4 GB available on the system, your application will by default be able to see only 2 GB. Why?

Because on 32 bit systems, Windows splits the virtual address space into two equal parts: one for User Mode applications, and another one for the Kernel (system code). This behavior can be overridden by using the “/3gb” flag in the Windows boot.ini config file. If we do so, the system will reserve 3 GB for user applications and 1 GB for the kernel.

However, that won’t change the fact that our application will see only 2 GB, unless we explicitly set another flag in the application image header: IMAGE_FILE_LARGE_ADDRESS_AWARE. The combination of both flags on a 32 bit Operating System is commonly known as 4GT (4 GigaByte Tuning).

Surprisingly, on 64 bit environments the issue is pretty similar. Even though these systems don’t suffer from the same limitations on physical memory or on the address space reserved for the kernel (in fact, the /3gb flag doesn’t apply to them), processes hit the same wall when trying to address more than 2 GB. Unless the same flag (IMAGE_FILE_LARGE_ADDRESS_AWARE) is set for the executable, the default limit is always the same.
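Before worrying about these limits, it helps to know what kind of process you are actually running. The following is a minimal sketch; the class name AddressSpaceInfo is made up for illustration, and Environment.Is64BitProcess and Environment.Is64BitOperatingSystem require .Net 4.0 or later:

```csharp
using System;

public static class AddressSpaceInfo
{
    // Returns a short description of the bitness of the current process and OS.
    public static string Describe()
    {
        // IntPtr.Size is 4 in a 32 bit process and 8 in a 64 bit process.
        int pointerBits = IntPtr.Size * 8;
        return string.Format(
            "Process: {0} bit (64 bit process: {1}), 64 bit OS: {2}",
            pointerBits,
            Environment.Is64BitProcess,
            Environment.Is64BitOperatingSystem);
    }
}
```

A 32 bit process running on a 64 bit O.S. is exactly the case where the large address aware flag makes the biggest difference.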

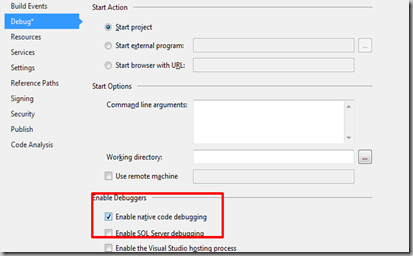

Activating the flag: IMAGE_FILE_LARGE_ADDRESS_AWARE

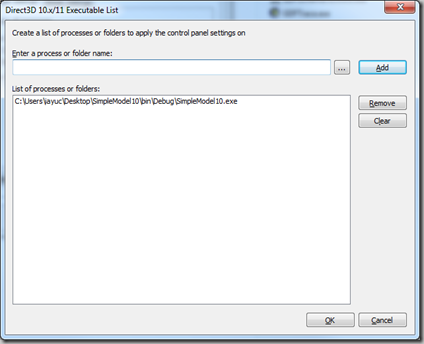

- In native Visual C++ applications, it’s pretty straightforward to set that flag, as Visual Studio has an option for it. You just need to set the /LARGEADDRESSAWARE Linker parameter, and you are ready to go.

- In C#, .Net applications:

  - Applications compiled as 64 bit have that flag set by default, so you will already have access to an 8 TB address space (depending on the O.S. version).

  - Applications compiled as 32 bit need to be modified with a tool called EditBin.exe (distributed with Visual Studio). This tool sets the appropriate flag in your EXE, allowing your application to access a 4 GB address space when running on a 64 bit Windows, or a 3 GB address space when running on a 32 bit Windows with 4GT enabled.
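To verify whether an executable already carries the flag, you can read its PE header yourself. This is only a sketch under a few assumptions: the class name LargeAddressAware is hypothetical, the offsets follow the documented PE/COFF layout (e_lfanew at 0x3C, Characteristics 18 bytes after the "PE" signature), and error handling is kept to a minimum:

```csharp
using System;
using System.IO;

public static class LargeAddressAware
{
    // Value of IMAGE_FILE_LARGE_ADDRESS_AWARE in the COFF Characteristics field.
    private const ushort LargeAddressAwareFlag = 0x0020;

    // Reads the PE headers from a seekable stream and reports whether the flag is set.
    // The caller owns the stream, so the reader is intentionally not disposed here.
    public static bool IsSet(Stream image)
    {
        var reader = new BinaryReader(image);

        // Offset 0x3C of the DOS header stores the position of the PE signature.
        image.Seek(0x3C, SeekOrigin.Begin);
        int peOffset = reader.ReadInt32();

        image.Seek(peOffset, SeekOrigin.Begin);
        if (reader.ReadUInt32() != 0x00004550) // the bytes "PE\0\0", little endian
            throw new InvalidDataException("Not a PE image.");

        // Characteristics is the last field of the 20 byte COFF header,
        // which places it 18 bytes after the end of the signature.
        image.Seek(peOffset + 4 + 18, SeekOrigin.Begin);
        ushort characteristics = reader.ReadUInt16();
        return (characteristics & LargeAddressAwareFlag) != 0;
    }

    // Convenience overload for a file on disk.
    public static bool IsSet(string exePath)
    {
        using (FileStream file = File.OpenRead(exePath))
            return IsSet(file);
    }
}
```

Setting the flag itself is usually done from a Visual Studio command prompt with something like editbin /LARGEADDRESSAWARE MyApp.exe, where MyApp.exe is a placeholder for your own executable.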

The next table (taken from here) summarizes the limits in virtual address space, depending on the platform and on the kind of process we are running:

This page has much more info on the issue.

System memory limits. Closer than you expect

Nowadays, memory is cheap. However, as explained in the previous chapter, there are many situations where you will end up having only 2 GB available, despite the total amount of physical memory installed in your PC.

In addition to that, if your application is being developed in .Net, you will find that the Runtime itself introduces a considerable memory overhead (around 600-800 MB). So, it’s not unusual to start receiving OutOfMemory exceptions when memory usage reaches 1.2 or 1.3 GB.

This blog talks further about this.

So, if you are not in one of those cases where the address space is expanded beyond 2 GB, and you are developing in .Net, your actual memory limit will be around 1.3 GB.
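To see how close your own process is to those figures, you can log a few counters at runtime. This is a rough sketch (the MemoryReport class is a made-up name); it uses GC.GetTotalMemory for the managed heap and the Process class for the process-wide numbers, which also include the memory used by the Runtime itself:

```csharp
using System;
using System.Diagnostics;

public static class MemoryReport
{
    // Builds a one-line summary of the memory used by the current process.
    public static string Snapshot()
    {
        const double MB = 1024.0 * 1024.0;
        using (Process current = Process.GetCurrentProcess())
        {
            // Bytes currently allocated in the managed heap (no forced collection).
            long managed = GC.GetTotalMemory(false);
            // Memory committed exclusively to this process.
            long privateBytes = current.PrivateMemorySize64;
            // Virtual address space reserved or committed by this process.
            long virtualBytes = current.VirtualMemorySize64;

            return string.Format(
                "Managed heap: {0:F1} MB, private bytes: {1:F1} MB, virtual size: {2:F1} MB",
                managed / MB, privateBytes / MB, virtualBytes / MB);
        }
    }
}
```

Calling MemoryReport.Snapshot() before and after a big allocation gives a quick idea of how much of the address space is really left.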

That’s more than enough for 99% of applications, but others, like intensive computing apps or those related to databases, may need more. Way more…

And things get even worse…

To make things even more complicated, you will soon learn that having some amount of memory available is one thing, and finding a contiguous block of memory available is a completely different story.

As a result of O.S. memory management techniques like Paging, and of the creation and destruction of objects, memory gets more and more fragmented. That means that even though there is a certain amount of free memory, it is scattered across a bunch of small holes instead of forming a single, big chunk.

Modern Operating Systems and the .Net platform itself apply techniques to reduce fragmentation, like the so-called Compaction (moving objects in memory to fuse several free chunks into a single, bigger one). Although these techniques reduce the impact of fragmentation, they do not eliminate it completely.

This article describes in detail the memory management of the .Net Garbage Collector (GC), and the compaction task it performs.

In the context of this article, fragmentation is a big issue: if you need to allocate a 10 MB contiguous array, you will receive an OutOfMemory exception if the system cannot find a contiguous chunk of memory for it, even if there is 1 GB of free memory available for your process. And this happens more frequently than you may expect when you deal with big arrays.
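If your code can fall back to a different strategy (smaller blocks, streaming from disk, and so on), one option is to wrap big allocations so that a failure is reported instead of thrown. A minimal sketch, assuming a hypothetical helper named SafeAllocation:

```csharp
using System;

public static class SafeAllocation
{
    // Tries to allocate a contiguous byte array of the requested length.
    // Returns false, and a null buffer, if the Runtime cannot find room for it.
    public static bool TryAllocate(int length, out byte[] buffer)
    {
        try
        {
            buffer = new byte[length];
            return true;
        }
        catch (OutOfMemoryException)
        {
            // Either not enough free memory, or no contiguous hole big enough.
            buffer = null;
            return false;
        }
    }
}
```

Catching OutOfMemoryException is only reasonable around a single, well-delimited allocation like this one. It is not a general recovery mechanism.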

In .Net, fragmentation and compaction of objects are tightly related to object size, so let’s talk a bit about that too:

Allocation of big objects

Maybe you don’t know it, but all versions of .Net up to 4.0 (1.0, 2.0, 3.0, 3.5 and 4.0) limit the maximum size of a single object to 2 GB. No matter whether you are running in a 64 bit or a 32 bit process, you cannot create anything bigger than that in a single object. Only since version 4.5 can that limit be lifted for arrays, in 64 bit processes only, by enabling the gcAllowVeryLargeObjects setting in the application configuration file. However, with very few exceptions, you are very likely applying a wrong design to your application if you need to create such big objects.

In the .Net world, the GC classifies objects into two categories: small and large. Were you expecting something more technical? Yeah, me too… But that’s it. Any object smaller than 85,000 bytes is considered small, and any object of 85,000 bytes or more is considered large. When the CLR is loaded, the Heap assigned to the application is divided into two parts: the SOH (Small Object Heap) and the LOH (Large Object Heap). Each kind of object is stored in its corresponding Heap.
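You can observe the threshold from code. On Microsoft’s CLR, objects in the LOH are reported as belonging to the oldest generation from the moment they are created, while a freshly created small object normally starts in generation 0. The sketch below relies on that behavior; the HeapProbe class is a made-up name:

```csharp
using System;

public static class HeapProbe
{
    // Allocates a byte array of the given length and returns the GC generation
    // reported for it right after creation.
    public static int GenerationOfNewArray(int byteCount)
    {
        byte[] data = new byte[byteCount];
        int generation = GC.GetGeneration(data);
        GC.KeepAlive(data); // make sure the array is still alive while we inspect it
        return generation;
    }
}
```

Comparing HeapProbe.GenerationOfNewArray(1000) with HeapProbe.GenerationOfNewArray(85000) should show the small array in a young generation and the large one in GC.MaxGeneration.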

It’s also worth noting that compacting Large objects is very expensive, so it is simply not done in current versions of .Net (the developers have said that this might change in the future). The only compaction-like operation applied to Large objects is that two adjacent dead objects are fused together into a single chunk of free memory, but no Large object is currently moved to reduce fragmentation.
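As a side note for readers on newer runtimes: starting with .Net 4.5.1, the GCSettings class exposes an opt-in, one-off compaction of the LOH. The following sketch only applies to those versions, and the LohCompaction class name is an assumption made for illustration:

```csharp
using System;
using System.Runtime;

public static class LohCompaction
{
    // Requests a single compaction of the Large Object Heap and triggers it.
    // Returns the number of bytes allocated in the managed heap afterwards.
    public static long CompactNow()
    {
        // Ask the GC to compact the LOH during the next blocking full collection.
        GCSettings.LargeObjectHeapCompactionMode =
            GCLargeObjectHeapCompactionMode.CompactOnce;

        // Force that collection right away.
        GC.Collect();

        return GC.GetTotalMemory(false);
    }
}
```

This is an expensive operation, so it makes sense only at well-known points, for example right after releasing a large set of big arrays.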

This fantastic article has much more information about the LOH.

C# Arrays when reaching memory limits

Simple Arrays (or 1D arrays) are one of the most common ways of consuming memory in C#. As you probably know, the CLR always allocates them as single, contiguous blocks of memory. In other words, when you instantiate an object of type byte[1024], you are requesting 1024 bytes of contiguous memory, and you will get an OutOfMemory exception if the system cannot find a chunk of contiguous, free memory of that size.

When dealing with multi-dimensional arrays, C# offers different approaches:

Jagged arrays, or arrays of arrays: [][]

Declared as byte[][], this is the classical solution to implement multi-dimensional arrays. In fact, it’s the usual approach for dynamically sized multi-dimensional arrays in languages like C++.

With regard to memory allocation, they behave as a simple array of elements (one block of memory), where each element is another array (another, different block of memory). Therefore, an array like byte[1024][1024] will involve the allocation of 1024 blocks of 1024 bytes each, plus the outer block that holds the 1024 references.
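Note that byte[1024][1024] is just shorthand here: C# does not accept new byte[1024][1024], so the rows have to be created one by one. A minimal sketch (the JaggedDemo class and its Create method are illustrative names):

```csharp
using System;

public static class JaggedDemo
{
    // Creates a jagged array with `rows` independent rows of `cols` bytes each.
    public static byte[][] Create(int rows, int cols)
    {
        byte[][] grid = new byte[rows][];   // one block holding `rows` references
        for (int r = 0; r < rows; r++)
            grid[r] = new byte[cols];       // one separate block per row
        return grid;
    }
}
```

Each row is an object of its own, so rows can live anywhere in the heap and could even have different lengths.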

Multi-Dimensional Arrays: [,]

C# also offers another kind of array: multi-dimensional arrays, declared like byte[,].

Although they are very comfortable to use and easy to instantiate, they behave completely differently with regard to memory allocation, as they are allocated in the Heap as a single block of memory for the total size of the array. Following the previous example, an array like byte[1024, 1024] will involve the allocation of one single, contiguous block of 1 MB.
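The same 1024 x 1024 example written as a multi-dimensional array looks like this. It is a small sketch with made-up names (RectangularDemo, Create, Describe) that only uses the standard Rank, GetLength and Length members of System.Array:

```csharp
using System;

public static class RectangularDemo
{
    // Allocates the whole rows x cols grid as one single object.
    public static byte[,] Create(int rows, int cols)
    {
        return new byte[rows, cols];
    }

    // Summarizes the shape of the array.
    public static string Describe(byte[,] grid)
    {
        return string.Format(
            "rank {0}, {1} x {2}, {3} elements",
            grid.Rank, grid.GetLength(0), grid.GetLength(1), grid.Length);
    }
}
```

For RectangularDemo.Create(1024, 1024), Describe reports 1,048,576 elements, all of them inside one object that is well above the 85,000 byte threshold and therefore ends up in the LOH.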

In the next chapter we will make a quick comparison of both types of arrays:

Comparison: [,] vs [][]

2D array [,] (allocated as a single block of memory):

Pros:

- Consumes less memory (no need to store references to all N blocks of memory)

- Faster allocation (allocating a bigger, single block of memory is faster than allocating N, smaller blocks)

- Easier instancing (enough with one single line: new byte[128, 128])

- Useful tool methods, like GetLength(). Cleaner and easier usage.

Cons:

- Finding a single block of contiguous memory for them might be a problem, especially when dealing with big arrays, or when approaching the memory limits of your process

- Accessing elements in the array is slower than in jagged arrays (see below)

Jagged arrays [][] (allocated as N blocks of memory):

Pros:

- It’s easier to find available memory for this kind of array because, due to fragmentation, it’s more likely that there will be N smaller blocks available than a single, contiguous block of the full size of the array.

- Accessing elements in the array is faster than in 2D arrays, mostly because of the compiler optimizations for handling simple, 1D arrays (after all, a jagged array is composed of several 1D arrays).

Cons:

- Consumes a bit more memory than 2D arrays (need to store references to the N simple arrays).

- Allocation is slower, as it needs to allocate N blocks instead of a single one

- Instancing is uncomfortable, as you need to loop through the array elements to instantiate them too (see below for a tip)

- Doesn’t provide the same tool methods, and might be a bit more complex to read and understand
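The access-speed claims above are easy to check on your own machine. The following is only a rough micro-benchmark sketch: the class and method names are made up, and the timings depend heavily on the Runtime version, the JIT and the build configuration, so no results are quoted here:

```csharp
using System;
using System.Diagnostics;

public static class ArrayAccessBenchmark
{
    // Sums every element of a multi-dimensional array.
    public static long SumRectangular(int[,] data)
    {
        long sum = 0;
        for (int i = 0; i < data.GetLength(0); i++)
            for (int j = 0; j < data.GetLength(1); j++)
                sum += data[i, j];
        return sum;
    }

    // Sums every element of a jagged array, row by row.
    public static long SumJagged(int[][] data)
    {
        long sum = 0;
        for (int i = 0; i < data.Length; i++)
        {
            int[] row = data[i];
            for (int j = 0; j < row.Length; j++)
                sum += row[j];
        }
        return sum;
    }

    // Fills a size x size array of each kind with ones and times both sums.
    public static void Run(int size)
    {
        int[,] rect = new int[size, size];
        int[][] jagged = new int[size][];
        for (int i = 0; i < size; i++)
        {
            jagged[i] = new int[size];
            for (int j = 0; j < size; j++)
            {
                rect[i, j] = 1;
                jagged[i][j] = 1;
            }
        }

        Stopwatch watch = Stopwatch.StartNew();
        long rectSum = SumRectangular(rect);
        long rectMs = watch.ElapsedMilliseconds;

        watch.Restart();
        long jaggedSum = SumJagged(jagged);
        long jaggedMs = watch.ElapsedMilliseconds;

        Console.WriteLine("[,]  sum={0} in {1} ms", rectSum, rectMs);
        Console.WriteLine("[][] sum={0} in {1} ms", jaggedSum, jaggedMs);
    }
}
```

Run it in a Release build, outside the debugger, with something like ArrayAccessBenchmark.Run(4000), and repeat the measurement a few times before drawing conclusions.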

This blog has a great comparison of them too.

Conclusion

Each user should decide which kind of array best fits the specific case at hand. However, a developer who usually needs large amounts of memory, and who cares more about performance than about comfort, ease of use or readability, will probably decide to use Jagged arrays ([][]).

Tip: code to automatically instantiate a 2D, jagged array

Instancing a multi-dimensional jagged array can be tedious and repetitive. This generic method will do the work for you:

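    // Allocates a jagged array with pWidth rows of pHeight elements each.
    public static T[][] AllocateArray2D<T>(int pWidth, int pHeight)
    {
        T[][] ret = new T[pWidth][];
        // Loop over the first dimension (pWidth), allocating one row per entry.
        for (int i = 0; i < pWidth; i++)
            ret[i] = new T[pHeight];
        return ret;
    }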
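As a usage sketch, the block below wraps a helper with the same signature in a hypothetical ArrayHelpers class, so that it compiles on its own, and calls it with a width different from the height. That is a quick way to check that every row really gets allocated:

```csharp
using System;

public static class ArrayHelpers
{
    // Allocates a jagged array with pWidth rows of pHeight elements each.
    public static T[][] AllocateArray2D<T>(int pWidth, int pHeight)
    {
        T[][] ret = new T[pWidth][];
        for (int i = 0; i < pWidth; i++)
            ret[i] = new T[pHeight];
        return ret;
    }

    // Example call: a 3 x 5 grid of doubles, written to at its last position.
    public static double[][] Example()
    {
        double[][] grid = AllocateArray2D<double>(3, 5);
        grid[2][4] = 1.5; // last row, last column
        return grid;
    }
}
```

Using non-square dimensions in tests like this one makes any mix-up between the two parameters show up immediately as a null row or an IndexOutOfRangeException.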

Hope it helps!